Learn how Microsoft Security Copilot helps detect and prevent LLM (Large Language Model) data leaks in enterprise environments. Understand the risks, real-world scenarios, and how AI is changing cybersecurity in 2025.

🔐 What is Microsoft Security Copilot?

Microsoft Security Copilot is an AI-powered cybersecurity assistant developed by Microsoft, designed to help security teams detect, respond to, and prevent threats faster by leveraging GPT-4 and Microsoft’s threat intelligence.

It works alongside Microsoft Defender and Sentinel to enhance incident response, summarize attack paths, and detect anomalies — all in real time.

⚠️ Why LLMs Pose a Data Leak Risk

Large Language Models (LLMs) like ChatGPT, Claude, or Gemini are powerful tools, but they pose real risks in enterprise environments:

- Employees may paste sensitive data (API keys, passwords, emails) into chat prompts.

- LLMs may store or reflect this data unintentionally in later responses.

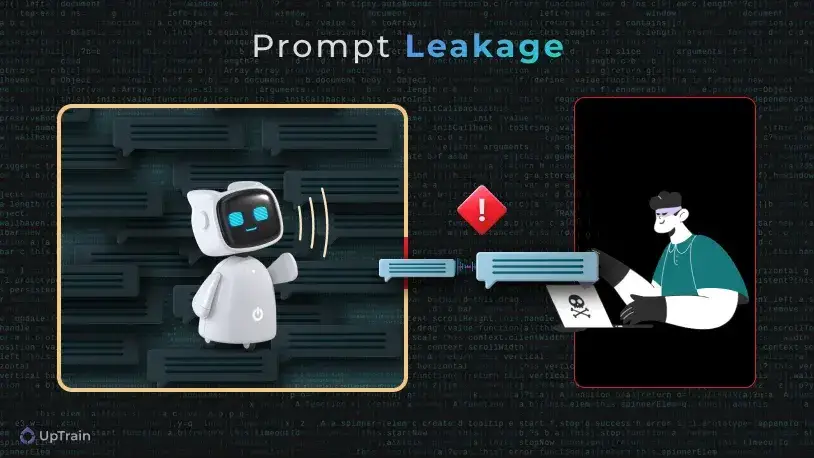

- Attackers can use prompt injection to extract stored data.

🧠 Example: An employee pastes confidential client data into ChatGPT to summarize a report. That data may be stored or used in training, violating compliance policies.

🧠 How Security Copilot Helps Detect LLM Data Leaks

✅ 1. Analyzing Prompt History

Security Copilot can monitor prompt logs across enterprise chat tools to identify:

- Potential data exposure (e.g. credit card numbers, personal info)

- Suspicious patterns (e.g. repeated copy-pasting of sensitive code)

✅ 2. Integration with Microsoft Defender

It cross-references prompt behavior with threat intelligence to flag LLM abuse or unauthorized data transfers.

✅ 3. Real-Time Alerts

It sends automated alerts to security teams when an AI tool accesses, stores, or shares unexpected data — helping prevent breaches before they escalate.

🧪 Real-World Example: LLM Leak via Internal Help Bot

A company used an internal AI chatbot trained on their documents. An attacker prompted the bot with a cleverly worded request, and the bot exposed a confidential financial forecast embedded in its training data.

Security Copilot’s Role:

Detected the prompt injection attack.

Flagged the sensitive document exposure.

Suggested isolation and retraining of the bot.

🔧 Key Features That Enable LLM Leak Detection

| Feature | Description |

|---|---|

| GPT-4 Integration | Natural language understanding of threats |

| Threat Intelligence | Uses Microsoft’s 65 trillion daily signals |

| Integration with SIEMs | Works with Microsoft Sentinel, Defender |

| Live Incident Timeline | Timeline-based tracking of potential breaches |

🌐 External Tools That Help with LLM Safety

- Prompt Injection Scanner – Protect AI

- Google’s LLM Safety Best Practices

- OpenAI’s Guidelines on API Use

❓ FAQ Section

Q: Can Security Copilot monitor third-party tools like ChatGPT Enterprise?

A: Yes, when integrated with enterprise networks and logging, Security Copilot can monitor prompt logs and flag sensitive interactions with LLMs like ChatGPT or Bard.

Q: Is Microsoft Security Copilot available for all companies?

A: As of 2025, it is available for Microsoft 365 E5 customers and integrates with Sentinel, Defender, and other Microsoft security products.

Q: What kinds of data leaks can it detect?

A: Everything from API key exposure, internal document leakage, user PII, and abnormal prompt behavior in AI tools.

✅ Conclusion

Microsoft Security Copilot represents a huge step forward in AI-driven cybersecurity. With the growing use of LLMs in businesses, data leakage is no longer theoretical — it’s happening. Copilot’s ability to detect, prevent, and respond to these leaks gives security teams the AI superpowers they need in 2025.

Leave a Reply